Part I

Foundations and Communication

Understanding what GenAI is, how it works, and how humans interact with it

Chapter 1: Foundations of Generative AI

Generative Artificial Intelligence (GenAI) represents a paradigm shift in how machines can create and innovate. This chapter lays the groundwork by defining GenAI, tracing its historical development, explaining its operational principles, and introducing the diverse array of models that power its capabilities.

1.1 What is Generative AI and its History

Generative AI refers to artificial intelligence systems capable of creating novel content, such as text, images, audio, or video, by learning patterns from existing data. While the concept has roots in early AI research, its recent prominence, particularly since 2022-2023, marks a significant leap forward in AI's creative potential.

The journey of Generative AI spans decades. Early explorations include chatbots like ELIZA in the 1960s, which simulated conversation. The development of neural networks through the 1980s and 1990s laid crucial groundwork, followed by the rise of deep learning in the 2000s. Key breakthroughs accelerated the field: Generative Adversarial Networks (GANs) were introduced by Ian Goodfellow and his colleagues in 2014, revolutionizing image generation. Diffusion models, which gradually refine noise into coherent data, began to emerge conceptually around 2015 and gained significant traction later. The release of OpenAI's GPT-3 (Generative Pre-trained Transformer 3) in 2020, and subsequently GPT-3.5 (powering early versions of ChatGPT) in late 2022, demonstrated unprecedented language capabilities. This led to an explosion of diverse GenAI models and applications starting in 2023, a period noted by McKinsey as one of rapid expansion and adoption (McKinsey, 2025, p. 16, Exhibit 8).

A common question is why generative AI is surging now. Its recent emergence is fundamentally driven by a convergence of three critical factors:

- Sophisticated AI Model Architectures: Innovations like the Transformer architecture have enabled models to understand and generate complex patterns with greater accuracy.

- Vast Datasets: The digital age has produced an unprecedented amount of data (text, images, code), crucial for training these large models. Notably, IDC estimates that 90% of a company's data is unstructured (Shelf & ViB, 2025, p. 7), highlighting the rich, albeit challenging, resource available for GenAI.

- Exponential Increase in Computing Power: Advances in GPUs and distributed computing have made it feasible to train models with billions or even trillions of parameters.

Generative AI's applications are diverse and rapidly expanding. According to McKinsey's 2025 "The State of AI" report, organizations are most commonly using GenAI to create text outputs (63% of respondents), followed by images (36%) and computer code (27%) (McKinsey, 2025). Broadly, applications span:

- Text Generation: Large language models create contextually relevant text for dialogue (chatbots), explanation, summarization, content creation, and translation.

- Image Generation: Techniques like GANs and Diffusion models produce high-quality, realistic, or artistic images used in art, design, advertising, and entertainment.

- Audio Generation: Creating music, synthesizing voices for text-to-speech applications, and generating sound effects, with uses in media, entertainment, and education.

- Video Generation: Translating text descriptions or images into dynamic videos for fields like art, entertainment, education, marketing, and healthcare.

- Code Generation: Assisting software development by producing code snippets, functions, or even entire programs, aiding in debugging, testing, and rapid prototyping.

- Data Generation and Augmentation: Creating synthetic data to train other AI models where real-world data is scarce or private (e.g., in healthcare), or augmenting existing datasets to improve model robustness. This is particularly relevant for gaming, autonomous driving, and more.

- Virtual World Creation: Generating realistic environments, characters, and assets for gaming, simulations, entertainment, education, and metaverse platforms.

1.2 How Does Generative AI Work?

Understanding the mechanics of generative AI can be simplified through analogy. Consider training a dog: the task might be teaching it to press a button upon hearing a specific command. This process involves:

- Data Collection: The command (input) and the desired button press (output).

- Training Process: Repeated commands, with rewards (e.g., treats) for correct actions.

- Learning: The dog associates the command with the action, learning to ignore irrelevant factors (e.g., tone of voice, background noise, if not part of the signal).

- Iterative Improvement: The dog gets better with practice, perhaps with added distractions to test robustness.

- Testing and Deployment: Testing in new situations leads to reliable deployment (the dog pressing the button consistently on command).

Large language models (LLMs), a cornerstone of generative AI, operate through a more complex but conceptually similar process involving sophisticated architecture, extensive training, and inference (generation).

- Architecture: Based primarily on the Transformer architecture, LLMs utilize self-attention mechanisms across multiple layers, often containing billions of parameters (weights and biases that the model learns).

- Pre-training: LLMs undergo extensive pre-training on vast datasets, which can include internet text, books, articles, and code. They learn linguistic patterns, facts, reasoning abilities, and common sense knowledge through self-supervised learning (often loosely called unsupervised learning), typically by predicting the next word (or token) in a sequence.

- Tokenization: Text is processed via tokenization, breaking it into manageable units like words or subwords.

- Contextual Understanding: Attention mechanisms are crucial, allowing the model to weigh the importance of different parts of the input text when making predictions, enabling it to understand context even over long sequences.

- Fine-tuning: After pre-training, models can be fine-tuned on smaller, more specific datasets to adapt them for particular tasks (e.g., medical text summarization, customer service responses) or to align them with desired behaviors (e.g., helpfulness, harmlessness).

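- Inference: When given a prompt (input text), the model generates output text by repeatedly predicting a probability distribution over the next token and selecting one (either the most likely token or a sample from the distribution), building up the response one token at a time.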

- Zero-shot and Few-shot Learning: A remarkable capability of LLMs is their ability to perform tasks they weren't explicitly trained for (zero-shot) or with very few examples (few-shot), thanks to the general patterns learned during pre-training.

- Scaling Laws: The overall performance generally improves with scaling—increasing model size (number of parameters), dataset size, and the amount of computational resources used for training. Empirical studies, such as "Scaling Laws for Neural Language Models" by Kaplan et al. (2020, arXiv:2001.08361), demonstrate that performance scales predictably with increases in these factors, highlighting the importance of scaling them in tandem for optimal results.
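As a rough worked illustration of the scaling behavior described in the last bullet, Kaplan et al. (2020) fit power laws of the following form for test loss L as a function of non-embedding parameter count N, for the regime where data and compute are not the bottleneck. The exponent shown is the approximate value reported for their experimental setup and should be read as illustrative rather than universal; N_c is a fitted constant.

```latex
L(N) \approx \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad \alpha_N \approx 0.076

\frac{L(2N)}{L(N)} = 2^{-\alpha_N} \approx 2^{-0.076} \approx 0.95
```

Under this fit, doubling model size alone lowers loss by only about 5%, which helps explain why meaningful gains have come from scaling by orders of magnitude, and why data and compute need to grow alongside parameter count.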

The foundation of GenAI's recent success rests firmly on these three pillars: the evolution of sophisticated Models, the availability of massive amounts of Data (much of it unstructured), and the dramatic increase in Computing power. The SAS Generative AI Global Research Report emphasizes that while organizations expect GenAI successes, they often encounter stumbling blocks in implementation related to these areas, particularly in "increasing trust in data usage," "unlocking value," and "orchestrating GenAI into existing systems" (SAS, 2024). The quality and management of data, especially unstructured data, which forms the bulk of enterprise information, are paramount. As the Shelf & ViB (2025, p. 7) report highlights, "Data quality is crucial for delivering trusted GenAI answers because ultimately the data becomes the answer."
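To tie the tokenization, pre-training, and inference steps in this section together, the following is a minimal sketch of a toy "language model" that learns only which word tends to follow which. It is an illustrative assumption, not how production LLMs are built: real models use subword tokenizers and Transformer networks rather than word counts, and the function names and corpus here are made up for the example.

```python
from collections import Counter, defaultdict

def tokenize(text):
    """Split text into lowercase word tokens (real LLMs use subword tokenizers)."""
    return text.lower().split()

def train_bigram(corpus):
    """Toy 'pre-training': count which token follows which in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts

def generate(counts, prompt, max_new_tokens=5):
    """Greedy inference: repeatedly append the most frequent next token."""
    tokens = tokenize(prompt)
    if not tokens:
        return ""
    for _ in range(max_new_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # the model has never seen anything follow this token
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)

# Hypothetical three-sentence training corpus.
corpus = [
    "a dog presses the button",
    "a dog hears the command",
    "a dog presses the button again",
]
model = train_bigram(corpus)
print(generate(model, "a dog", max_new_tokens=3))  # a dog presses the button
```

The sketch can only reproduce patterns present in its corpus, which is a small-scale view of why the data, in the words of the report quoted above, "becomes the answer."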

1.3 Types of Generative AI Models

Generative AI encompasses a variety of models and techniques, each with unique strengths and applications. Key examples include Generative Adversarial Networks (GANs), Diffusion models, Transformers, Variational Autoencoders (VAEs), Retrieval-Augmented Generation (RAG), Recurrent Neural Networks (RNNs), Autoregressive Models, and Convolutional Neural Networks (CNNs), among others.

Large Language Models (LLMs), such as those powering ChatGPT (Chat Generative Pre-trained Transformer), are predominantly based on the Transformer architecture. ChatGPT, developed by OpenAI, is designed primarily to generate human-like text responses in conversation. It does so by processing vast amounts of text data via unsupervised learning to grasp language patterns, grammar, facts, and even some reasoning capabilities. Modern GenAI, however, extends beyond text; multimodal models can process and generate content integrating multiple data types like images, audio, and text. Image generation, for instance, often involves models (like Diffusion models) learning to reverse a process of systematically adding noise to an image, enabling them to construct clear images from random noise during the generation phase.
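Because the Transformer architecture named above is built around self-attention, a small sketch may help make that mechanism concrete. The code below implements scaled dot-product attention in plain Python under simplifying assumptions: the token vectors are made-up numbers, and queries, keys, and values are the raw vectors themselves, whereas a real Transformer derives each from learned linear projections.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token should attend to every token in the sequence.
        weights = softmax([dot(q, k) / scale for k in keys])
        # The output is the weighted average of the value vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy 2-dimensional token vectors (illustrative, not learned embeddings).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how one token distributes its attention across the sequence; tokens whose vectors point in similar directions receive larger weights.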

Understanding specific model types can be aided by analogies:

- Generative Adversarial Networks (GANs): The Art Forgery Analogy. GANs feature a competitive dynamic between two neural networks: a Generator ("The Forger") that creates fake data (e.g., images) and a Discriminator ("The Detective") that tries to distinguish the fake data from real data. This adversarial process, where both networks improve over time, pushes the Generator to produce highly realistic outputs that are often indistinguishable from authentic data. (More details can be found at O'Reilly: https://www.oreilly.com/content/generative-adversarial-networks-for-beginners/)

- Diffusion Models: The Dust Cloud Analogy. These models learn by first taking clean data and gradually adding noise (like dust obscuring a picture) until it's essentially random. They then train a neural network to reverse this process, step-by-step. To generate new data, they start with pure noise and progressively "de-noise" it, guided by the learned reversal process, into a coherent and high-quality output. This allows for precise control over the generation process. (LeewayHertz provides an overview: https://www.leewayhertz.com/diffusion-models/)

- Transformer Models: The Orchestra Analogy. Fundamental to LLMs like GPT, Transformers use an "attention mechanism" (like an "Orchestra Conductor") to weigh the importance of different parts of the input sequence (the "musicians" or words) when generating an output. This allows the model to understand long-range dependencies and context within the text. "Multi-head attention" is like having multiple conductors, each focusing on different aspects of the "music" (text), leading to a richer and more nuanced understanding. (NVIDIA explains Transformers: https://blogs.nvidia.com/blog/what-is-a-transformer-model/)

- Variational Autoencoders (VAEs): The Compression/Decompression Analogy. VAEs consist of an encoder that compresses input data into a lower-dimensional, continuous latent space (a compact representation) and a decoder that reconstructs the original data from this latent representation. The "variational" aspect introduces a probabilistic approach to the encoding, ensuring the latent space has good properties that allow for generating new, similar data points by sampling from this space. They are useful for both data compression and creative generation. (IBM discusses VAEs: https://www.ibm.com/think/topics/variational-autoencoder)

- Retrieval-Augmented Generation (RAG): The Librarian and Author Analogy. RAG models enhance the generation capabilities of LLMs by first retrieving relevant information from an external knowledge base (the "Librarian" finding the right books or documents) based on the user's prompt. This retrieved information is then provided as context to the LLM (the "Author"), which uses it to generate a more accurate, up-to-date, and contextually grounded response. This is particularly useful for mitigating hallucinations and incorporating domain-specific or recent information. The Shelf & ViB report (2025, p. 7) notes RAG as a key strategy for leveraging an organization's (often unstructured) data. (AWS explains RAG: https://aws.amazon.com/what-is/retrieval-augmented-generation/)
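The Librarian and Author flow in the RAG analogy above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the knowledge base is invented, similarity is computed over plain word counts (production systems typically use learned embeddings and a vector store), and the final call to an LLM is left out, so the sketch stops at the prompt that would be sent.

```python
import math
from collections import Counter

def embed(text):
    """Toy 'embedding': a bag-of-words count vector."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two count vectors."""
    shared = set(a) & set(b)
    num = sum(a[t] * b[t] for t in shared)
    den = (math.sqrt(sum(v * v for v in a.values()))
           * math.sqrt(sum(v * v for v in b.values())))
    return num / den if den else 0.0

def retrieve(question, documents, top_k=1):
    """The 'Librarian': rank documents by similarity to the question."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:top_k]

def build_prompt(question, passages):
    """Assemble the context handed to the 'Author' (the LLM)."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")

# Hypothetical knowledge base for illustration only.
knowledge_base = [
    "Refunds are processed within 14 days of the return request.",
    "Support is available on weekdays from 9am to 5pm.",
    "Premium plans include priority onboarding.",
]
question = "How long do refunds take?"
passages = retrieve(question, knowledge_base)
prompt = build_prompt(question, passages)
print(prompt)
```

Grounding the prompt in retrieved passages is what lets the model answer from current, organization-specific information rather than from its training data alone.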

The development of GenAI is extraordinarily rapid, with models constantly evolving in capability and efficiency. Performance benchmarks from platforms like the Chatbot Arena (https://chat.lmsys.org/?arena), which uses Elo ratings based on human preferences, offer valuable insights into the comparative strengths of leading models (e.g., OpenAI's GPT 5, Anthropic's Claude Sonnet 4.0 & Opus 4.1, Google's Gemini 2.5, Mistral AI's models) across various tasks. This dynamic landscape reflects a diversifying ecosystem with both large, proprietary models and increasingly powerful open-source alternatives. The SAS report (2024, p. 24) aptly notes, "LLMs alone do not solve business problems. GenAI is nothing more than a feature that can augment your existing processes, but you need tools that enable their integration, governance and orchestration."
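For readers unfamiliar with the Elo ratings mentioned above, the sketch below shows the standard Elo update applied to a single head-to-head preference vote between two models. The starting ratings and the K-factor of 32 are assumptions chosen for illustration and are not taken from the Chatbot Arena methodology.

```python
def expected_score(rating_a, rating_b):
    """Probability that A is preferred over B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))

def update(rating_a, rating_b, a_won, k=32):
    """Return both ratings after one head-to-head comparison."""
    expected_a = expected_score(rating_a, rating_b)
    score_a = 1.0 if a_won else 0.0
    delta = k * (score_a - expected_a)
    return rating_a + delta, rating_b - delta

# Two hypothetical models start level; a human voter prefers model A.
model_a, model_b = 1500.0, 1500.0
model_a, model_b = update(model_a, model_b, a_won=True)
print(model_a, model_b)  # 1516.0 1484.0
```

Because the adjustment depends on how surprising the result was, beating a much higher-rated model moves the ratings more than beating an evenly matched one.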

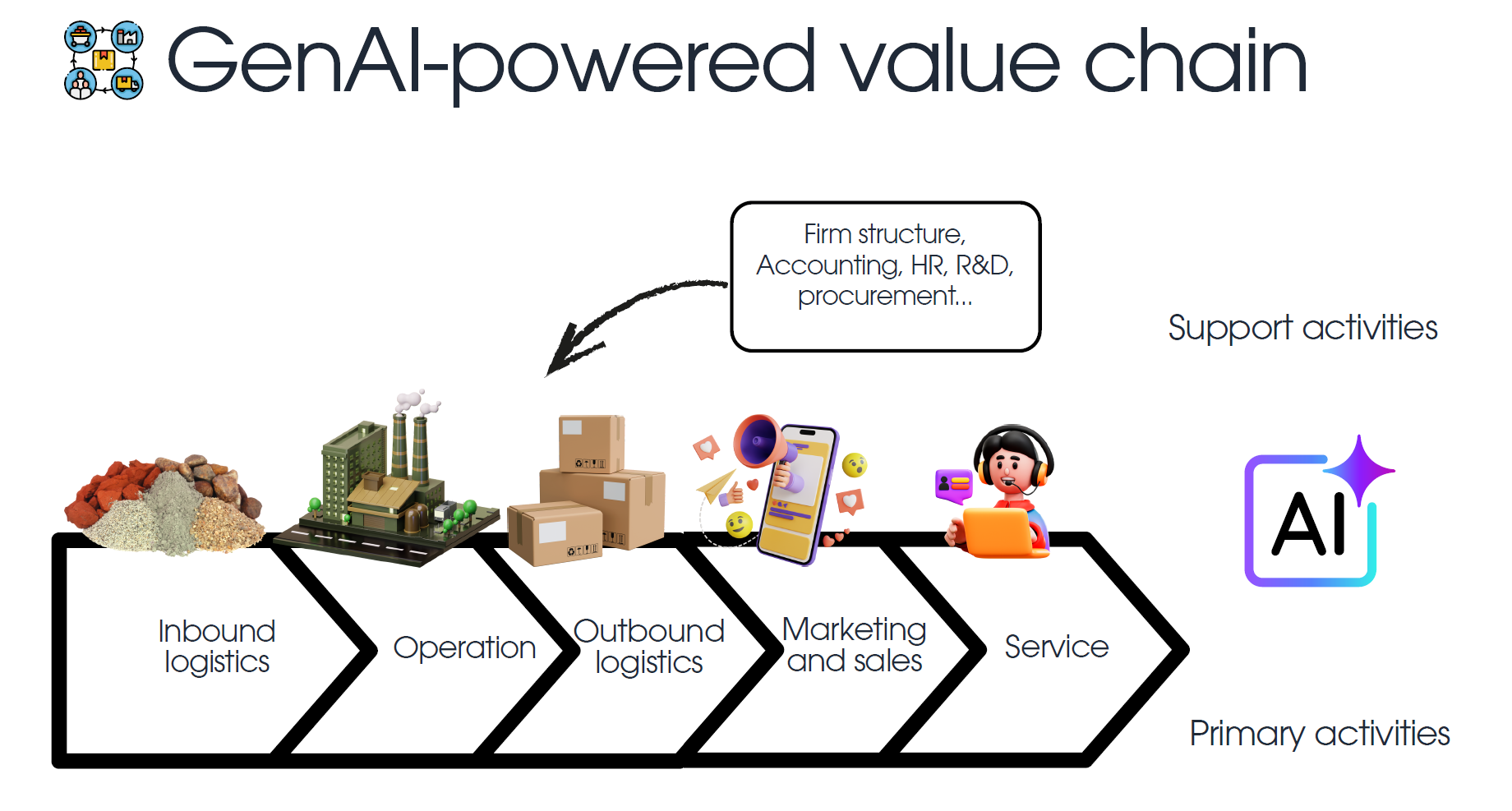

Chapter 2: Business Integration of Generative AI

The integration of Generative AI into business operations is no longer a futuristic concept but a present-day imperative for organizations seeking to innovate, enhance efficiency, and gain a competitive edge. This chapter explores the strategic levels at which GenAI can be adopted, examines its transformative impact on business models and value creation, and addresses the critical challenges and considerations organizations must navigate for successful implementation.

2.1 Four Levels of Integration

Integrating Generative AI into business operations can be approached at different strategic levels, each presenting unique complexities, costs, and potential for competitive advantage. Drawing from strategies observed in practice and insights from research (e.g., Scott Cook, Andrei Hagiu, and Julian Wright in Harvard Business Review, January-February 2024, "Manage Generative AI by Strategizing at Four Levels"), four key levels emerge:

Level 1: Adopt Publicly Available Tools. This entry-level approach involves using standard, off-the-shelf GenAI tools like public chatbots (e.g., GPT 5, Claude Sonnet 4.0), image generators (e.g., Midjourney, Gemini nano banana), video editors (e.g., Gemini Veo), or coding assistants. The primary aim is often to improve internal process efficiency, such as drafting emails, summarizing documents, or generating initial creative ideas.

- Complexity & Cost: Low. Easy to implement with minimal upfront investment.

- Customization: None for the underlying AI models themselves.

- Competitive Advantage: Often temporary, as these tools quickly become widely adopted and can be considered "table stakes."

- Considerations: Relying on third-party tools can raise data privacy and security concerns, especially if sensitive company information is entered into them. The SAS report (2024, p. 6, 9) highlights that 76% of organizations are concerned about data privacy and 75% about security with GenAI.

Level 2: Customize Existing Tools. Organizations at this level tailor readily available AI tools to their specific needs. This typically involves leveraging APIs provided by model developers (e.g., OpenAI API, Anthropic API) or fine-tuning pre-trained models with proprietary company data and know-how. This allows for the creation of customized AI solutions that can enhance customer experiences (e.g., personalized support bots), add unique capabilities to products (e.g., AI-powered features), and potentially improve user interfaces through personalization.

- Complexity & Cost: Moderate. Requires technical expertise for API integration and fine-tuning, along with data preparation efforts.

- Customization: High for application, moderate for the core model (fine-tuning adapts an existing model).

- Competitive Advantage: Can be significant if the customization leverages unique data or addresses specific customer needs effectively.

- Considerations: Data governance for fine-tuning data is crucial. The Shelf & ViB report (2025, p. 11) notes that 57% of companies are "fine-tuning on your data" to address unstructured data issues, indicating this is a common approach.

Level 3: Create Automatic Data Feedback Loops. A more advanced strategy involves designing systems where the outputs and user interactions generated by AI tools automatically feed back into the system, continuously refining the model, associated processes, or knowledge bases with minimal human intervention. This requires redesigning products or services to deeply integrate AI and to capture reliable signals from customer usage that drive ongoing improvement. Such feedback loops can establish a compounding competitive advantage that is difficult for others to replicate, as the AI system becomes increasingly "smarter" and more attuned to the specific domain through usage.

- Complexity & Cost: High. Requires significant engineering effort, data infrastructure, and a strategic approach to product design.

- Customization: Very high, focusing on the entire ecosystem around the AI.

- Competitive Advantage: Potentially very strong and sustainable, creating a "data moat."

- Considerations: Ethical implications of data collection and algorithmic bias need careful management. Continuous monitoring is essential.

Level 4: Develop Proprietary Models. The most sophisticated and resource-intensive level entails building unique generative AI models from the ground up (foundation models) or significantly modifying existing open-source architectures, tailored specifically to address core business problems using internal data and expertise. This approach might be pursued by companies with unique data advantages or specific needs that off-the-shelf or fine-tuned models cannot meet.

- Complexity & Cost: Extremely high. Demands significant AI research talent, massive computational resources, and extensive datasets.

- Customization: Maximum flexibility and control over the model architecture and training process.

- Competitive Advantage: Can be substantial and highly sustainable if the model provides a breakthrough capability or solves a unique, high-value problem.

- Considerations: Very few companies have the resources for this. Success is not guaranteed, and it involves long development cycles. The McKinsey report (2025, p. 8, Singla commentary) advises organizations to "think big" and aim for "wholesale transformative change," which could align with this level for some.

As McKinsey's 2025 report observes, organizations are still in the "early days" but are actively "redesigning workflows, elevating governance, and mitigating more risks" as they move through these levels (McKinsey, 2025, p. 2).

2.2 Business Models and Value Creation

Generative AI holds immense potential to reshape business models and unlock new avenues for value creation across industries and functions. Its impact is increasingly evident in workplace trends, productivity metrics, and strategic organizational shifts. The McKinsey (2025, p. 2) report title itself, "The state of AI: How organizations are rewiring to capture value," underscores this focus.

Perceived Potential and Adoption Trends: The adoption of AI, including GenAI, is rapidly increasing. McKinsey (2025, p. 15) found that 78% of survey respondents reported their organizations use AI in at least one business function in July 2024, up from 72% in early 2024 and 55% a year prior. Specifically for GenAI, 71% of respondents stated their organizations regularly use it in at least one business function, a jump from 65% in early 2024 (McKinsey, 2025, p. 17). This rapid uptake signals a broad recognition of GenAI's potential. The SAS report (2024, p. 27) similarly notes that over half (54%) of businesses have begun to implement GenAI, with 86% investing in it in 2024 or planning for 2025.

Enterprises are committing significant resources to GenAI across various functions. The Shelf & ViB (2025, p. 3, 6) survey found the highest levels of GenAI commitment (planning, PoC, deployed, or scaling) in software development (87%), data management/BI/analytics (86%), and operations/process automation (83%).

Productivity and Output Quality Gains: Concrete evidence supports GenAI's ability to boost productivity and output quality. Studies have shown significant improvements:

- Software engineers coding up to twice as fast using AI assistance (Peng et al., 2023, "The Impact of AI on Developer Productivity: Evidence from GitHub Copilot").

- Consultants completing tasks 25% faster with 40% higher quality output when using GenAI tools (Dell'Acqua et al., 2023, "Navigating the Jagged Technological Frontier: Field Experimental Evidence of the Effects of AI on Knowledge Worker Productivity and Quality").

- Writing tasks being finished up to twice as quickly (Noy and Zhang, 2023, "Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence").

Beyond speed and quality, benefits often extend to enhanced creativity and job satisfaction. The SAS report (2024, p. 4) found that among organizations embracing GenAI, 89% reported improved employee experience and satisfaction, 82% noted savings on operational costs, and 82% stated higher customer retention. The primary outcome targeted by companies for GenAI, according to Shelf & ViB (2025, p. 3, 7), is improving operational efficiency (61% of respondents).

Revenue and Cost Impacts: Organizations are beginning to see tangible financial benefits. McKinsey's latest survey (2025, p. 22-23) indicates an increasing share of respondents reporting value creation, with larger shares than in early 2024 stating their GenAI use cases have increased revenue and led to cost reductions within deploying business units. For instance, in the second half of 2024, 70% of those using GenAI in strategy and corporate finance reported revenue increases, and 61% using it in supply chain and inventory management reported cost decreases.

Accelerated Employee Development: Research by Brynjolfsson, Li, and Raymond (2023, NBER WP 31161, "Generative AI at Work") demonstrated that AI assistance significantly helped newer customer support agents improve their performance, effectively shortening the learning curve; across all agents, issues resolved per hour rose by about 14% on average. This suggests GenAI can democratize expertise and accelerate onboarding.

Strategic Rewiring for Value Capture: To fully harness GenAI, organizations are undertaking significant changes. McKinsey (2025, p. 2) highlights that companies are "redesigning workflows, elevating governance, and mitigating more risks." Indeed, 21% of respondents using GenAI reported their organizations have fundamentally redesigned at least some workflows (McKinsey, 2025, p. 4). This often requires top-down C-suite commitment and leadership, as emphasized by Alexander Sukharevsky: "Effective AI implementation starts with a fully committed C-suite and, ideally, an engaged board" (McKinsey, 2025, p. 4).

2.3 Challenges and Considerations in GenAI Integration

While the potential of GenAI is vast, its successful integration is fraught with challenges that organizations must proactively address. These span data quality, governance, strategic alignment, technological hurdles, and talent acquisition.

1. Data Quality and Unstructured Data Management: The adage "garbage in, garbage out" is acutely relevant for GenAI. The quality of the data used to train and prompt these models directly determines the quality and reliability of their outputs.

- Scale and Impact of Unstructured Data Issues: The Shelf & ViB (2025) survey reveals the magnitude of this challenge: 85% of organizations manage over 1 million documents and files, with 51% handling over 10 million (p. 3, 8). Crucially, 92% of participants indicated that unstructured data issues impacted their GenAI initiatives, with 30% describing this impact as "large" or "significant" (p. 4, 8).

- Prevalence of Problematic Data: 68% of respondents in the Shelf & ViB (2025, p. 4, 9) survey said that more than half of all their files had at least one issue, and 42% stated that over 70% of their documents and files had an issue that could hinder GenAI success.

- Common Data Issues: The most frequent problems include duplicate files and multiple versions (66%), out-of-date information (53%), and conflicting versions of files (47%) (Shelf & ViB, 2025, p. 4, 10).

- Primary Data Sources: SharePoint is the primary source of unstructured data for GenAI initiatives (67% of respondents), followed by email (46%) and Microsoft OneDrive (45%) (Shelf & ViB, 2025, p. 4, 9), indicating a reliance on common enterprise systems that may not have been designed with GenAI in mind.

- Addressing Data Issues: While 74% plan to leverage unstructured data despite issues, 55% are planning to address these issues in the next 12-24 months (Shelf & ViB, 2025, p. 4, 10-11). Common approaches include fine-tuning models on existing data (57%) and adding new data management/quality solutions (48%) (Shelf & ViB, 2025, p. 11). The SAS report (2024, p. 11, Insight 1) notes, "Data management and analytics tools can detect outliers and sources of bias in the raw data used to feed LLMs."
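Two of the issues listed above, duplicate files and out-of-date information, lend themselves to a simple automated audit before documents are handed to a GenAI system. The sketch below is one possible approach under stated assumptions: the file names, contents, dates, and the one-year staleness threshold are all hypothetical, and hashing only catches copies that match after whitespace and case normalization.

```python
import hashlib
from collections import defaultdict
from datetime import date

def fingerprint(text):
    """Hash whitespace- and case-normalized text so trivially different copies match."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def audit(documents, today, max_age_days=365):
    """Group duplicate documents and flag those older than max_age_days."""
    groups = defaultdict(list)
    stale = []
    for name, (text, last_modified) in documents.items():
        groups[fingerprint(text)].append(name)
        if (today - last_modified).days > max_age_days:
            stale.append(name)
    duplicates = [sorted(names) for names in groups.values() if len(names) > 1]
    return duplicates, sorted(stale)

# Hypothetical document set: name -> (content, last modified date).
docs = {
    "policy_v1.txt": ("Refunds take 30 days.", date(2021, 3, 1)),
    "policy_copy.txt": ("refunds  take 30 days.", date(2024, 6, 1)),
    "policy_v2.txt": ("Refunds take 14 days.", date(2024, 9, 1)),
}
duplicates, stale = audit(docs, today=date(2025, 1, 1))
print(duplicates)  # [['policy_copy.txt', 'policy_v1.txt']]
print(stale)       # ['policy_v1.txt']
```

Note that the conflicting versions (30 days versus 14 days) are not detected here; finding those generally requires near-duplicate detection or human review.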

2. Governance, Risk, and Compliance (GRC): Effective governance is paramount to manage the risks associated with GenAI, including bias, misinformation (hallucinations), IP infringement, and security vulnerabilities.

- Lack of Preparedness: The SAS report (2024, p. 6) found that only one in ten organizations has undergone the preparation needed to comply with GenAI regulations, and a staggering 95% lack a comprehensive governance framework for GenAI.

- Key Concerns: Data privacy (76%) and security (75%) are top concerns for organizations using GenAI (SAS, 2024, p. 6, 9). McKinsey's survey (2025, p. 7) also shows increasing mitigation efforts for inaccuracy, intellectual property infringement, and privacy risks.

- Monitoring Deficiencies: Only one in twenty organizations (5%) has a reliable system to measure bias and privacy risk in LLMs, and seven in ten (71%) are not able to continuously monitor their GenAI systems (SAS, 2024, p. 6, 11, 12). McKinsey (2025, p. 6) found that only 27% ...

- Leadership Oversight: CEO oversight of AI governance is correlated with higher bottom-line impact. McKinsey (2025, p. 3) reports that 28% of organizations using AI say their CEO is responsible for overseeing AI governance.

- Centralization Models: Risk and compliance, along with data governance for AI, tend to be more centralized, whereas tech talent and adoption of AI solutions are often managed via a hybrid model (McKinsey, 2025, p. 5).

3. Strategic Alignment and Organizational Understanding: A clear strategy and widespread understanding are vital for effective GenAI deployment.

- Understanding Gap: 93% of senior tech decision-makers admit they do not fully understand GenAI or its potential impact on business processes (SAS, 2024, p. 15).

- Prioritization and Best Practices: 66% of companies lack a standard process for prioritizing GenAI use cases (Shelf & ViB, 2025, p. 3, 7). Furthermore, less than one-third of organizations report following most of the 12 key adoption and scaling best practices for GenAI (McKinsey, 2025, p. 9).

- Usage Policies: 39% of organizations do not have a GenAI usage policy in place for their staff (SAS, 2024, p. 15, 16).

4. Technological Integration and Tools: Integrating GenAI into existing systems and workflows can be a significant technical hurdle.

- Tooling and Compatibility: Almost half (47%) of decision-makers report lacking appropriate tools to implement GenAI, and 41% experience compatibility issues when combining GenAI with current systems (SAS, 2024, p. 19).

- Data Set Obstacles: 52% encounter obstacles in using public and proprietary datasets effectively (SAS, 2024, p. 19).

- Technological Limitations as a Barrier: Over a third (34%) state that the biggest challenge to monitoring GenAI is technological limitations (SAS, 2024, p. 19, 21).

5. Talent and Skills: The demand for GenAI-proficient professionals often outstrips supply, although this is evolving.

- In-House Skills Gap: Half of organizations (51%) are concerned they do not have the in-house skills to use GenAI effectively, and 39% have found insufficient internal expertise to be an obstacle to implementation (SAS, 2024, p. 25).

- Evolving Hiring Landscape: McKinsey (2025, p. 11-12) notes that organizations are hiring for new risk-related roles like AI compliance specialists and AI ethics specialists. While hiring for AI-related roles remains challenging, the difficulty has somewhat eased compared to previous years for many roles, with the notable exception of AI data scientists, who remain in high demand.

- Reskilling Efforts: Organizations are increasingly focusing on reskilling their workforce for AI, with many expecting to undertake more AI-related reskilling in the next three years than in the past year (McKinsey, 2025, p. 13).

2.4 The Future of Work with Generative AI

Generative AI is not just a technological advancement; it is a catalyst for fundamental changes in how work is performed, how teams collaborate, and how individuals conceptualize their careers.

- Solopreneurship and Democratization of Tools: The accessibility of powerful GenAI tools is lowering barriers to entry for individuals to start and scale businesses, potentially leading to a rise in solopreneurship and small, agile enterprises.

- Human-GenAI Collaboration (Copilots): The dominant paradigm is shifting towards humans working alongside AI in "copilot" scenarios. AI assists with tasks like drafting, research, coding, and analysis, augmenting human capabilities rather than fully replacing them. McKinsey (2025, p. 19-20) data shows C-level executives are leading the charge in personal GenAI use, potentially modeling this collaborative approach.

- Importance of Human-GenAI Communication (Prompt Engineering): The ability to effectively communicate with GenAI models through well-crafted prompts (prompt engineering) is becoming a critical skill across many roles.

- Workflow Automation: GenAI is enabling deeper automation of workflows at both the company and individual levels. This goes beyond simple task automation to encompass more complex, multi-step processes.

- Emergence of AI Agents: The development of autonomous AI agents capable of understanding goals, planning, and executing complex tasks independently represents a significant future trend. These agents could manage projects, conduct research, or even run aspects of a business with minimal human oversight.

- Workforce Impact and Restructuring: While a plurality of respondents in McKinsey's survey (2025, p. 14) anticipate little immediate change to their workforce size due to GenAI, there are expectations of shifts. Decreased headcount is anticipated in functions like service operations and supply chain/inventory management, while increases are expected in IT and product/service development. The report also notes that headcount reductions, when they occur as a result of GenAI, are one of the organizational attributes with the largest impact on bottom-line value realized (McKinsey, 2025, p. 13).

- Shifting Skill Demands and Continuous Learning: As noted by Lareina Yee, "the difficulty of finding AI talent, while still considerable, is beginning to ease...the long-term workforce effects are still only beginning to take shape" (McKinsey, 2025, p. 15). Continuous learning and adaptation will be key for both individuals and organizations.

As Michael Chui concludes in the McKinsey report (2025, p. 24), "AI only makes an impact in the real world when enterprises adapt to the new capabilities that these technologies enable." This adaptation is an ongoing journey that will define the future of work.

Chapter 3: Communicating with GenAI

3.1 Human-GenAI Communication

The advent of powerful Generative AI (GenAI) models like Gemini 2.5, Claude Sonnet 4.0 and Opus 4.1, and GPT-5 marks a paradigm shift in how humans interact with technology. Beyond mere tools, these AIs are becoming collaborators, assistants, and even creative partners. Central to this evolving relationship is the skill of Human-GenAI communication: the ability to effectively convey intent, guide reasoning, and elicit desired outputs from these complex systems.

The Art and Science of Communicating with AI

In the burgeoning field of AI, the ability to communicate effectively with generative models is rapidly becoming a critical skill. It's not just about typing a question; it's about understanding how these models "think" (or rather, process information and predict sequences), how they interpret language, and how to structure your requests to navigate their vast knowledge and capabilities. This interaction is a two-way street: the AI must understand your needs, and you must learn the "language" that best elicits the AI's strengths. Mastering this communication is not merely beneficial but essential for unlocking the true potential of GenAI.

The importance of such skilled communication cannot be overstated, as it forms the bedrock of successful human-AI collaboration. It is the primary mechanism by which users translate nuanced human intent into explicit instructions that an AI can interpret and act upon. Many factors affect how well your communication works: the model you use, its training data, its configuration parameters, your word choice, style and tone, structure, and the context you provide. This is why crafting effective communication is often an iterative process. Inadequate or ambiguous communication can lead to inaccurate, irrelevant, or even nonsensical responses, hindering the AI's ability to provide meaningful assistance and ultimately undermining its utility.

Conversely, effective communication transforms GenAI from a novelty into a powerful tool for diverse applications, ranging from creative content generation and complex problem-solving to nuanced summarization, translation, and sophisticated code development. By clearly defining the task, providing necessary context, and guiding the AI's 'reasoning' process, users can significantly improve the quality, relevance, and utility of the generated outputs. This precision not only maximizes the return on investment in GenAI technologies by ensuring outputs are fit for purpose but also helps in steering the models away from generating unintended biases or nonsensical 'hallucinations,' thereby fostering more reliable and trustworthy AI interactions. The practice of meticulously crafting these instructions is known as prompt engineering, a discipline explored in detail in the next section.

Some might argue that as AI models become "smarter," the need for careful prompting will diminish. While it's true that more advanced models can infer intent better from less precise inputs, the fundamental need for clear communication remains. Think of it this way: a less capable model is like a bachelor's student who needs very explicit instructions and a lot of guidance, while an advanced model is like a PhD student who can understand more nuanced requests and even anticipate some of your needs. However, even with a PhD student, you still need to clearly articulate your research question, your desired methodology, and your expected outcomes to achieve the best results. Similarly, to unlock the full potential of any GenAI model, precise and thoughtful communication is paramount.

Interestingly, individuals with backgrounds in communication, literature, liberal arts, and languages may find themselves at an advantage. These disciplines cultivate skills in articulating complex ideas clearly, understanding nuance and subtext, structuring arguments, and appreciating the power of word choice – all of which are crucial for effective Human-GenAI interaction.

3.2 Prompt Engineering: Crafting Effective Inputs

Prompt engineering is the discipline of designing and refining the input (the "prompt") given to a Large Language Model (LLM) to guide it towards producing the desired output. It's a blend of art and science, requiring both creativity in phrasing and systematic testing of different approaches. Remember, an LLM is a prediction engine; it takes sequential text as input and predicts the most probable next token based on its training. Effective prompt engineering sets up the LLM to predict the right sequence of tokens for your specific task.

Core Concepts of Prompt Engineering:

Several core concepts underpin effective prompting:

- Clarity and Specificity (Instruction):

- Be Clear and Direct: Think of the AI as a new employee needing explicit instructions. Avoid ambiguity. State your request in brief but specific language.

- Write Clear Instructions: The less the model has to guess, the better. If outputs are too long, ask for brevity; if too simple, ask for expert-level writing.

- Include Details: Provide important details or context. Otherwise, you leave it to the model to guess.

- Simple, Concise Language: Avoid jargon and overly complex sentences.

- Contextualization:

- Provide Context When Necessary: If the request relies on specific knowledge or a particular situation, include that information.

- Provide Reference Text (RAG principles): LLMs can invent fake answers. Providing trusted reference text (like from a database query or document) helps ground the model and reduce fabrications. Instruct the model to use this information.

- Role Assignment / Persona:

- Ask the Model to Adopt a Persona: Assigning a role (e.g., "You are a historian," "Act as a data scientist") can drastically improve output by tailoring tone, style, expertise, and format.

- Providing Examples (Few-Shot, One-Shot, Zero-Shot Learning):

- Zero-Shot: The simplest form, providing only the task description without examples. Relies on the model's pre-trained knowledge.

- One-Shot & Few-Shot: Providing one (one-shot) or multiple (few-shot) examples of the desired input-output pattern. This helps the model understand the task, format, and style. The number of examples depends on task complexity and model capability. Generally, 3-5 examples are a good start for few-shot.

- Quality of Examples: Examples should be relevant, diverse, high-quality, and well-written. A small mistake can confuse the model.

- Structuring Prompts:

- Use Delimiters: Clearly indicate distinct parts of the input (e.g., instructions, examples, context, text to be summarized) using markers like triple quotes, XML tags, or section titles.

- Utilize XML Tags: For structured responses, XML tags can enhance clarity and precision, helping the AI segregate elements of the task.

- Output Format Specification: Explicitly state the desired output format (e.g., bullet points, JSON, table, specific number of paragraphs).

- The Iterative Process (Prompt Development Lifecycle):

- Iterate: Prompt engineering is rarely a one-shot success. Expect to refine and tweak.

- A Common Prompt Development Lifecycle includes steps like:

- Define the Task and Success Criteria.

- Develop Test Cases (including edge cases).

- Engineer the Preliminary Prompt.

- Test Prompt Against Test Cases.

- Refine Prompt.

- Ship the Polished Prompt (and be ready for further iteration).

- Test Changes Systematically: A change might improve isolated examples but worsen overall performance. Define a comprehensive test suite ("eval").

- Document Attempts: Crucial for learning and debugging. Track prompt versions, model settings, and outcomes.

- LLM Output Configuration (Model Parameters):

- Output Length (Max Tokens): Controls how much text the model generates. More tokens mean more computation, cost, and potentially slower responses.

- Sampling Controls:

- Temperature: Controls randomness. Lower values (e.g., 0.1-0.2) for deterministic, factual tasks. Higher values (e.g., 0.7-0.9) for creative, diverse outputs. A temperature of 0 is "greedy decoding."

- Top-K: Selects from the K most likely next tokens.

- Top-P (Nucleus Sampling): Selects from the smallest set of tokens whose cumulative probability exceeds P.

- These settings interact. For example, at temperature 0, Top-K and Top-P become largely irrelevant.
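
To make the interaction between these sampling controls concrete, here is a minimal sketch of how temperature, Top-K, and Top-P might be applied to a model's raw scores for the next token. The function name `sample_next_token` and the toy scores are illustrative assumptions; real inference engines differ in detail, including the order in which filters are applied.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick one token from a {token: score} dict (hypothetical helper)."""
    # Temperature 0 is greedy decoding: always return the highest-scoring token,
    # which is why Top-K and Top-P no longer matter in that case.
    if temperature == 0:
        return max(logits, key=logits.get)

    # Softmax with temperature scaling (subtract the max for numerical stability).
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())
    weights = {tok: math.exp(score - peak) for tok, score in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(
        ((tok, w / total) for tok, w in weights.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )

    # Top-K: keep only the K most likely tokens.
    if top_k is not None:
        ranked = ranked[:top_k]

    # Top-P: keep the smallest prefix whose cumulative probability reaches P.
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, prob in ranked:
            kept.append((tok, prob))
            cumulative += prob
            if cumulative >= top_p:
                break
        ranked = kept

    tokens = [tok for tok, _ in ranked]
    probs = [prob for _, prob in ranked]
    # random.choices renormalizes the remaining weights before drawing.
    return rng.choices(tokens, weights=probs, k=1)[0]


# Toy scores for the next token after "The capital of France is".
toy_logits = {"Paris": 5.0, "London": 2.0, "Rome": 1.0}
print(sample_next_token(toy_logits, temperature=0))             # greedy: "Paris"
print(sample_next_token(toy_logits, temperature=0.9, top_k=2))  # "Paris" or "London"
```

Raising the temperature flattens the distribution, so less likely tokens are drawn more often; the Top-K and Top-P filters then limit how far into the tail the sampler can reach.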

Prompt Engineering Techniques Overview

The following table outlines various specific techniques. These techniques often build upon the core concepts discussed above.

| Technique | Description | Example | Applicable Scenarios |

|---|---|---|---|

| Zero-shot Prompting | This technique asks AI a question or gives a task without providing specific examples. It relies on the model's generalization and pretraining knowledge. Effective for general knowledge queries, but may have limited performance for domain-specific or complex tasks. | "Explain the concept of dark matter and its significance in cosmology." | Testing foundational knowledge, straightforward questions, assessing model generalization ability, and quick information retrieval. |

| Few-shot Prompting | Provides a few relevant question-answer examples before the main query to help the AI understand the task requirements and desired output format. Enhances performance for specific tasks requiring particular formats or styles. | Example 1: Q: What shape is the Earth? A: Spherical. Example 2: Q: What color is Mars? A: Red. Question: What is the size of Jupiter? | Tasks requiring specific formats or styles, helping AI understand specific requirements, and adapting to uncommon or new tasks. |

| Chain of Thought (CoT) | Encourages AI to think step-by-step like humans, showing the entire problem-solving process. Improves accuracy for complex problems and enhances output transparency and explainability. | "Calculate (27 × 14) + (35 × 12). Please explain your calculation process step by step." | Complex math or logical problems, tasks needing detailed reasoning, improving decision transparency, and teaching or instructional scenarios. |

| Tree of Thought (ToT) | An extension of Chain of Thought, allowing AI to explore multiple thinking paths like a decision tree. Useful for open-ended questions or tasks requiring creativity. | "Design a sustainable urban transport system. Propose at least three options, analyze environmental impact, costs, and social benefits, then recommend the best option." | Complex decision-making, tasks requiring multi-angle analysis, creative thinking, strategic planning, and scenarios needing multi-factor trade-offs. |

| Self-consistency | Also called majority voting. Generates multiple independent answers and selects the most common or most reasonable one. Increases reliability and stability, especially for questions with multiple possible answers. | "Provide three independent explanations of how global warming affects sea level rise. Compare them and select the most comprehensive and scientific one." | Scenarios requiring high reliability, questions with multiple possible answers, reducing randomness, and improving solution quality for complex problems. |

| Prompt Templates | Creates standardized prompt structures with placeholders for specific content. Improves consistency and efficiency, especially for repetitive tasks. Ensures all necessary details are included while maintaining structure. | Template: "As a [profession], how do you view [topic]? Consider [factor 1] and [factor 2], and provide specific [suggestions/solutions]." Example: "As an environmental scientist, how do you view plastic pollution? Consider marine ecology and human health." | Tasks needing repeated execution, maintaining consistency and structure, and automating the creation of similar but varied prompts. |

| Role-playing Prompts | Requires AI to act as a specific role (e.g., expert, historical figure, or professional) to provide answers or complete tasks. Offers targeted and professional responses from a specific perspective. | "Assume you are Aristotle. Discuss the pros and cons of modern democracy from the perspective of ancient Greek philosophy." | Questions needing specific professional perspectives, simulating expert advice, exploring historical viewpoints, creative writing, and role modeling. |

| Step-by-step Prompting | Breaks complex tasks into a series of simpler steps, guiding AI step-by-step. Each step has clear instructions, ensuring the task is completed accurately and systematically. | "Let us design a mobile app step by step. First, define the app's main features." [Wait for response] "Good, next step: design the primary user interface elements." | Complex processes, teaching or instructional scenarios, multi-step task breakdowns, ensuring each task component receives attention. |

| Reverse Prompting | Asks AI to generate prompts that could lead to a specific output. Helps understand AI's reasoning, generate creative content, or optimize prompt strategies. | "Create a question whose answer is 'Photosynthesis is the process by which plants obtain energy.'" | Creative writing, understanding AI's associative logic, generating test questions, exploring AI knowledge structure. |

| AI Interview Technique | Simulates an interview process by asking AI a series of progressive questions to gather more detailed and accurate preferences. Each question builds on the previous response for in-depth exploration. | "I want to plan a trip. Please ask me questions to understand my preferences for this trip." | Suitable for scenarios where preferences are unclear, gathering detailed information. |

| Thought Provocation | Uses open-ended questions or hypothetical scenarios to stimulate deeper and more creative thinking. Encourages AI to explore novel ideas or solutions, exceeding conventional thinking. | "If humans suddenly gained the ability to read minds, how would society, the economy, and politics change?" | Creative thinking, hypothetical scenario analysis, exploratory problem-solving, stimulating innovative ideas. |

| Meta-prompting | Uses prompts to generate or refine other prompts. This advanced technique involves AI in the creation or optimization of prompts, improving quality and exploring new strategies. | "Design a prompt that effectively guides AI to generate an engaging opening for a historical novel." | Optimizing prompt strategies, exploring AI capabilities, generating task-specific prompts, improving AI system autonomy. |
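
Several rows in the table (prompt templates, few-shot prompting, role-playing) combine naturally in code. The sketch below uses Python's standard `string.Template` to fill placeholders and XML-style tags as delimiters. The tag names, the `build_prompt` helper, and the sample role are illustrative assumptions, not a format required by any particular model.

```python
from string import Template

# Reusable template: placeholders are filled per request, and XML-style tags
# separate instructions, examples, and the user's input.
PROMPT_TEMPLATE = Template(
    "You are $role.\n"
    "<instructions>\n$instructions\n</instructions>\n"
    "<examples>\n$examples\n</examples>\n"
    "<input>\n$user_input\n</input>\n"
    "Respond in this format: $output_format"
)


def build_prompt(role, instructions, examples, user_input, output_format):
    """Fill the template; `examples` is a list of (question, answer) pairs."""
    example_text = "\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return PROMPT_TEMPLATE.substitute(
        role=role,
        instructions=instructions,
        examples=example_text,
        user_input=user_input,
        output_format=output_format,
    )


prompt = build_prompt(
    role="an astronomy tutor",
    instructions="Answer the question in the same style as the examples.",
    examples=[
        ("What shape is the Earth?", "Spherical."),
        ("What color is Mars?", "Red."),
    ],
    user_input="What is the size of Jupiter?",
    output_format="one short sentence",
)
print(prompt)
```

Keeping the template separate from the values makes it easier to version the prompt and to reuse it across many inputs.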

Advanced Prompting Strategies

Beyond the foundational techniques, several advanced strategies can significantly enhance reasoning and task performance:

- Chain of Thought (CoT) Prompting: Encourages the LLM to generate intermediate reasoning steps before arriving at a final answer, mimicking human problem-solving. This improves accuracy, especially for complex tasks, and provides interpretability.

- Zero-shot CoT: Simply adding "Let's think step by step" can trigger this.

- Few-shot CoT: Providing examples that include the reasoning steps.

- Self-Consistency: An extension of CoT where multiple reasoning paths are generated (often by increasing temperature) for the same prompt, and the most common answer is selected. This improves robustness and accuracy.

- Tree of Thoughts (ToT): Generalizes CoT by allowing the LLM to explore multiple different reasoning paths simultaneously, like branches of a tree, evaluating them and deciding which path to pursue further. Useful for problems requiring exploration or strategic lookahead.

- Step-Back Prompting: Involves prompting the LLM to first consider a more general concept or principle related to the specific task, and then using that abstraction to inform the solution to the original, more specific problem. This helps the model activate broader knowledge.

- ReAct (Reason and Act): Enables LLMs to solve complex tasks by interleaving reasoning steps with action steps. Actions can involve using external tools (like a search engine or code interpreter) to gather information or perform calculations, which then feeds back into the reasoning process. This is a step towards agent-like behavior.

- Giving the Model Time to "Think" / Inner Monologue: Instruct the model to work out its own solution before rushing to a conclusion. For tasks where the reasoning process shouldn't be shared with the end-user (e.g., tutoring), an "inner monologue" can be used, where the reasoning is structured to be parsed out and hidden.

- Using External Tools / Retrieval Augmented Generation (RAG): Compensate for model weaknesses by feeding it outputs from other tools. A text retrieval system (RAG) can provide relevant documents, while a code execution engine can run code and do math. Embeddings-based search is key for efficient knowledge retrieval in RAG.

- Automatic Prompt Engineering (APE): Using an LLM to generate and refine prompts for another LLM or task. This involves generating candidate prompts, evaluating them (e.g., using metrics like BLEU/ROUGE or model-based evaluation), and selecting the best-performing ones.

- Code Prompting: Specific techniques for prompting LLMs to write, explain, translate, or debug code. This includes providing clear requirements, specifying the language, giving examples, and asking for explanations of generated code.

- Multimodal Prompting: Using multiple input formats (text, images, audio, code) to guide an LLM, depending on the model's capabilities.
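
As an illustration of self-consistency from the list above, the following sketch samples several reasoning paths and takes a majority vote over their final answers. The `generate` argument stands in for a real model call made at a non-zero temperature. The "Answer: ..." convention and the canned completions are assumptions made purely for the example.

```python
import re
from collections import Counter


def extract_answer(completion):
    """Pull the final answer from text containing 'Answer: <value>' (assumed convention)."""
    match = re.search(r"Answer:\s*(.+)", completion)
    return match.group(1).strip() if match else None


def self_consistent_answer(generate, prompt, n_samples=5):
    """Sample several completions and return the most common final answer."""
    answers = []
    for _ in range(n_samples):
        answer = extract_answer(generate(prompt))
        if answer is not None:
            answers.append(answer)
    if not answers:
        return None
    return Counter(answers).most_common(1)[0][0]


# Canned completions standing in for five sampled reasoning paths.
canned = iter([
    "27 x 14 = 378 and 35 x 12 = 420, so the sum is 798. Answer: 798",
    "27 x 14 = 368 and 35 x 12 = 420, so the sum is 788. Answer: 788",
    "378 + 420 = 798. Answer: 798",
    "27 x 14 = 378; 35 x 12 = 410; total 788. Answer: 788",
    "(27 x 14) + (35 x 12) = 378 + 420 = 798. Answer: 798",
])


def fake_generate(prompt):
    # Hypothetical stub: a real implementation would call a model here.
    return next(canned)


question = "Calculate (27 x 14) + (35 x 12). Let's think step by step."
print(self_consistent_answer(fake_generate, question))  # 798
```

Two of the five sampled paths contain arithmetic slips, yet the vote still returns the correct result, which is the robustness benefit the technique aims for.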

Best Practices in Prompt Engineering

Synthesizing from expert guidance, here are crucial best practices:

- Be Clear, Concise, and Specific:

- Design with Simplicity: If it's confusing for you, it's likely confusing for the model.

- Be Specific About the Output: Don't be too generic. Specify format, length, style, content focus.

- Use Instructions over Constraints (where possible): Tell the model what to do rather than a long list of what not to do. Constraints are valuable for safety or strict formatting.

- Provide High-Quality Examples: This is often the most impactful practice. Show, don't just tell. Ensure examples are diverse and well-written. For classification in few-shot, mix up the classes in your examples.

- Structure Your Prompt:

- Use Delimiters: To separate instructions, context, examples, and user input.

- Experiment with Input Formats and Writing Styles: Questions, statements, or instructions can yield different results.

- Use Variables in Prompts: For reusability and dynamic input, especially in applications.

- Manage Model Output and Behavior:

- Control Max Token Length: To manage response length, cost, and latency.

- Experiment with Output Formats (e.g., JSON, XML): Structured output can reduce hallucinations and be easier to parse. JSON Repair libraries can help with malformed JSON.

- Working with Schemas (for JSON input/output): Providing a JSON schema for input helps the LLM understand data structure and focus attention.

- Iterate and Evaluate:

- Experiment and Iterate: Try different prompts, analyze results, and refine.

- Test Changes Systematically: Use comprehensive test suites ("evals") to ensure net positive improvements. Evaluate against gold-standard answers if available.

- Document Various Prompt Attempts: Keep detailed records of prompts, model settings (temperature, top-k, top-p, model version), and outputs. This is crucial for learning, debugging, and consistency.

- Adapt to Model Updates: Models evolve. Revisit and adjust prompts to leverage new capabilities or account for changes in behavior.

- Ask for Feedback (from the model itself in conversational contexts): Some advanced interfaces allow you to ask the model to improve your prompt.

- Consider the "Task" and "Who":

- Clearly Define the Task: What do you want the AI to do?

- Assign a Role/Persona: This helps tailor the AI's expertise, tone, and style.
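
The advice to test changes systematically can be sketched as a tiny evaluation harness that scores each prompt version against the same test cases. Everything here is illustrative: `run_eval`, the test cases, and especially `fake_generate`, a deliberately contrived stub that stands in for a real model call.

```python
def run_eval(prompt_template, test_cases, generate):
    """Return the fraction of test cases whose output contains the expected text."""
    passed = 0
    for case in test_cases:
        output = generate(prompt_template.format(input=case["input"]))
        if case["expected"].lower() in output.lower():
            passed += 1
    return passed / len(test_cases)


def fake_generate(prompt):
    # Hypothetical stub: it only classifies when the task is stated explicitly,
    # and it naively treats the word "broke" as a negative signal.
    if "positive or negative" not in prompt:
        return "This review discusses a product."
    review = prompt.rsplit("Review:", 1)[-1].lower()
    return "negative" if "broke" in review else "positive"


TEST_CASES = [
    {"input": "Great battery life.", "expected": "positive"},
    {"input": "It broke after two days.", "expected": "negative"},
    # Edge case: a negative-sounding word used in a positive review.
    {"input": "It broke my habit of charging daily. Love it.", "expected": "positive"},
]

PROMPT_V1 = "Tell me about this. Review: {input}"
PROMPT_V2 = "Classify the review as positive or negative. Review: {input}"

for name, template in [("v1", PROMPT_V1), ("v2", PROMPT_V2)]:
    print(name, round(run_eval(template, TEST_CASES, fake_generate), 2))
```

Recording the score for each prompt version alongside the model settings shows whether a change is a net improvement. In this toy run, the edge case exposes a failure that the two simpler cases would miss.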

General Source Reference

The insights and techniques discussed in this chapter are synthesized from comprehensive prompt engineering guides and documentation provided by leading AI research organizations and cloud providers, including OpenAI's "Prompt engineering" guide, Anthropic's "Claude Prompt Engineering" resources, Google's "Gemini for Google Workspace Prompting Guide 101," and Google Cloud's "Prompt Engineering" whitepaper by Lee Boonstra, among others. These sources offer in-depth explanations, practical examples, and evolving best practices for interacting effectively with large language models.

3.3 Context Engineering: Building the Right Environment

While prompt engineering focuses on crafting the perfect instruction, context engineering represents the next evolution in human-AI interaction. It's about creating the optimal environment for AI models to think and act effectively—preparing the workspace with all necessary tools, references, and background information before the AI begins its work.

Understanding Context Engineering

Context engineering goes beyond writing clever prompts. Traditional prompt engineering emphasizes the right words, tone, and structure to guide model behavior. Context engineering takes a broader approach: providing everything the model needs to understand the situation comprehensively—conversation history, background documents, user preferences, available tools, and real-time data.

The fundamental principle is straightforward: AI models cannot read minds. Without proper context, even the most sophisticated prompt will yield suboptimal results. Context engineering addresses this limitation by ensuring the AI always has complete situational awareness.

Why Context Engineering Matters

Every AI model operates within a "context window"—essentially its short-term memory. Within this limited space, the model must balance your current question, previous messages, relevant facts, and task-specific data. Once this window reaches capacity, the model begins to forget earlier information.

As AI systems grow more sophisticated, prompts alone cannot carry the full burden of communication. A customer service bot must recall past interactions, access account data, and apply current company policies. A coding assistant needs to understand your entire project architecture, not just the individual file being edited. This is where context engineering becomes essential—transforming a single-turn chatbot into a long-term collaborative partner.

How Context Engineering Works

A context-aware AI system relies on several interconnected components that collectively shape what the model perceives and remembers:

- Information Sources:

Context originates from multiple locations: ongoing conversations, user profiles, documents, databases, APIs, available tools, and live data feeds. Each source contributes to the model's understanding of the current situation.

- Dynamic Assembly:

Rather than relying on static templates, context is constructed dynamically for each interaction. The system combines the most relevant, recent, and useful information specifically for that moment, ensuring the model always works with optimal inputs.

- Intelligent Selection:

Not all available information proves helpful. Effective context systems rank and filter what matters—employing semantic search to identify relevant data while discarding noise that might distract the model. This curation ensures focus and efficiency.

- Memory Management:

Short-term memory maintains the current exchange, while long-term memory preserves persistent information—user preferences, established facts, and lessons from past sessions. This dual-memory approach enables the AI to build continuity over time, creating truly personalized experiences.

- Format Optimization:

The presentation of context significantly impacts effectiveness. Concise, well-structured summaries outperform lengthy data dumps. Organized text is far easier for AI to process than unformatted information sprawl. Proper formatting maximizes the utility of limited context windows.
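
The selection and assembly steps described above can be sketched in a few lines. This example ranks candidate snippets by simple word overlap with the query, as a stand-in for the semantic (embedding-based) search a production system would more likely use. It then packs the best matches into a fixed word budget that approximates a context-window limit. The function names, tags, and sample snippets are all hypothetical.

```python
import re


def tokenize(text):
    """Lowercase a string and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def select_context(query, snippets, budget_words=40):
    """Rank snippets by word overlap with the query; keep the best that fit the budget."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(s)), s) for s in snippets]
    # Discard snippets with no overlap (noise), then sort the most relevant first.
    ranked = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    chosen, used = [], 0
    for _, snippet in ranked:
        cost = len(snippet.split())
        if used + cost <= budget_words:
            chosen.append(snippet)
            used += cost
    return chosen


def assemble_context(query, snippets, budget_words=40):
    """Build the final model input from the selected snippets and the question."""
    selected = select_context(query, snippets, budget_words)
    context = "\n".join(f"- {s}" for s in selected)
    return f"<context>\n{context}\n</context>\n<question>\n{query}\n</question>"


SNIPPETS = [
    "Return policy: items can be returned within 30 days with a receipt.",
    "Store hours are 9am to 6pm on weekdays.",
    "Shipping policy: orders ship within 2 business days.",
]

print(assemble_context("What is the return policy for items?", SNIPPETS))
```

Because the context is rebuilt for every query, the same store of snippets yields a different, focused input each time rather than one static template.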

Implementing Context Engineering

Building robust context systems requires balancing memory limitations with performance demands. Four core strategies provide the foundation:

- Write: Create and Save Context

Provide your AI with a comprehensive notebook. Store system instructions (e.g., "You are a helpful legal assistant who writes concise summaries"), maintain scratchpads for temporary thoughts, and establish long-term memory to preserve key facts across sessions. This written context serves as the AI's reference library.

- Select: Retrieve What's Relevant

Extract only necessary information for each task. Retrieval-Augmented Generation (RAG) searches documents or databases to find fresh, specific information relevant to the current query—such as fetching the latest return policy before answering a customer's question. Effective systems also identify appropriate tools and prioritize context by importance or recency.

- Compress: Reduce Context Size

Preserve essential information while eliminating unnecessary details. Summarization condenses lengthy conversations into key points. Trimming removes outdated or irrelevant content. Entity extraction captures crucial names, dates, and facts for later reference. These compression techniques maximize the utility of limited context windows.

- Isolate: Partition Context

Separate different types of work to prevent interference. Multi-agent architectures can delegate tasks to specialized sub-agents, each maintaining its own focused context. Session separation keeps planning, coding, and debugging in distinct threads, preventing confusion and maintaining clarity across different work streams.
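
Three of the four strategies above (Write, Compress, Isolate) can be illustrated with a small in-memory store. This is a sketch under simplifying assumptions: the `ContextStore` class is hypothetical, compression is reduced to trimming old turns (summarization would require a model call), and retrieval (Select) is omitted.

```python
from collections import defaultdict


class ContextStore:
    """Toy context manager: standing instructions, long-term facts, per-session history."""

    def __init__(self, system_instructions, max_turns=4):
        self.system_instructions = system_instructions  # Write: standing instructions
        self.long_term = {}                             # Write: facts kept across sessions
        self.sessions = defaultdict(list)               # Isolate: one history per work stream
        self.max_turns = max_turns

    def remember(self, key, value):
        """Write a persistent fact that every session can see."""
        self.long_term[key] = value

    def add_turn(self, session, role, text):
        """Append a turn to one session, then trim that session's history."""
        history = self.sessions[session]
        history.append((role, text))
        # Compress: drop the oldest turns once the session exceeds max_turns.
        if len(history) > self.max_turns:
            del history[: len(history) - self.max_turns]

    def build(self, session):
        """Assemble the text a model would receive for one session."""
        facts = "\n".join(f"{k}: {v}" for k, v in self.long_term.items())
        turns = "\n".join(f"{role}: {text}" for role, text in self.sessions[session])
        return (
            f"{self.system_instructions}\n\n"
            f"Known facts:\n{facts}\n\n"
            f"Conversation:\n{turns}"
        )


store = ContextStore(
    "You are a helpful legal assistant who writes concise summaries.", max_turns=2
)
store.remember("client", "Acme Ltd")
store.add_turn("planning", "user", "Outline the contract review.")
store.add_turn("planning", "assistant", "Step 1: list the parties.")
store.add_turn("planning", "user", "Add a step for termination clauses.")
store.add_turn("drafting", "user", "Draft the summary paragraph.")
print(store.build("planning"))
```

The planning session never sees the drafting turns, and its oldest turn has been trimmed away, while the long-term fact about the client appears in both sessions.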

Practical Tactics for Context Engineering

Implementing context engineering doesn't require extensive resources. These practical habits can significantly improve AI interactions:

- Be Specific: Vague inputs invariably produce vague outputs. Precision in context leads to precision in results.

- Show Examples: Demonstrations consistently outperform abstract descriptions in conveying requirements.

- State Expectations Clearly: Explicitly communicate what's required, what's optional, and what constitutes quality output.

- Iterate Systematically: Start with minimal context, test thoroughly, then expand based on results.

- Keep Context Fresh: Regularly update reference materials to ensure accuracy and relevance.

- Log Everything: Track how the AI utilizes tools, retrieves data, and where errors occur. This documentation proves invaluable for optimization.

- Avoid Ambiguity: If an instruction permits multiple interpretations, the AI will likely choose unexpectedly.

- Balance Flexibility and Control: Determine when creative freedom serves your goals and when strict reliability is paramount.

Context Engineering vs. Prompt Engineering

Prompt engineering and context engineering are complementary disciplines, not competing approaches. Prompt engineering addresses what you say to the AI. Context engineering determines what the AI knows when you speak.

Consider prompt engineering as providing directions to a destination. Context engineering represents the GPS system—continuously updating with traffic conditions, detours, and your preferences. Perfect prompts cannot compensate for inadequate context. Conversely, with rich, well-managed context, even simple prompts can produce remarkable results.

The Strategic Importance of Context

Context engineering marks a fundamental shift in AI development and deployment. Rather than optimizing individual prompts, we design systems that continuously provide AI with optimal information at optimal times.

The quality of AI output directly correlates with input quality. By carefully curating what the AI perceives and remembers, context engineering transforms AI from an occasionally helpful tool into a consistently reliable partner. As AI becomes integral to daily operations—from customer support to research and strategic decision-making—those who master context engineering will shape how these systems think, learn, and collaborate with humans.

The future of effective AI interaction lies not in finding perfect prompts, but in building perfect context. This represents the next frontier in human-AI collaboration, where thoughtfully engineered environments enable AI to achieve its full potential as a transformative business tool.

Part II

Tools and Applications

Understanding GenAI models, tools, and how they apply across business functions

Chapter 4: Large Language Models and Tools

Large Language Models (LLMs) have fundamentally transformed how we approach natural language processing and understanding. By synthesizing enormous datasets and leveraging sophisticated neural architectures, these models enable a variety of applications, from drafting text to solving complex problems in specialized fields. This chapter aims to provide a detailed comparison of some of the most notable LLMs currently available, including OpenAI's GPT series, Anthropic's Claude, Google's Gemini series, Deepseek, and Grok. Moreover, we will explore integrated platforms, playgrounds for experimentation, and options for local deployment of models.

4.1 Leading Commercial Models: OpenAI, Anthropic, and Google

4.1.1 OpenAI's GPT Series: Pushing the Boundaries

OpenAI's offerings have continually advanced, with the GPT series representing the current state of the art. GPT models build on their predecessors by significantly enhancing architecture and parameters, resulting in superior capabilities for contextual understanding, multimodal processing (text, image, audio), and nuanced language generation. GPT-5, in particular, offers improved speed and cost-effectiveness compared to o3, while matching its high intelligence level. These models manage complex tasks, hold longer conversations effectively due to large context windows, and display enhanced creativity and reliability across various subject matters.

For tasks prioritizing efficiency and cost, OpenAI offers models like GPT-5 mini. It retains strong language generation and understanding capabilities but operates with fewer parameters and potentially smaller context windows compared to the flagship models. This makes GPT-5 mini a suitable choice for less demanding scenarios where speed and cost are primary concerns, excelling in environments requiring faster feedback loops or handling high volumes of simpler tasks.

The comparison within the GPT series often involves balancing raw capability (GPT-5) against efficiency and cost (GPT-5 mini).

4.1.2 Anthropic's Claude Series: Focus on Enterprise and Safety

Anthropic's Claude series, culminating in models like Claude Sonnet, emphasizes safe, coherent, and reliable language output. Claude models are characterized by their strong performance in complex reasoning, coding, and writing tasks, alongside a focus on reducing harmful responses and maintaining alignment with user intent (Constitutional AI). This makes them well-suited for enterprise applications, professional services, and customer-facing environments where dependability and safety are paramount.

Claude Sonnet offers a balance of intelligence, speed, and cost, demonstrating strong capabilities in areas like programming and creative writing. It features a very large context window and introduces "App Preview," allowing interaction with external tools and APIs. While perhaps less focused on multimodality compared to GPT or Gemini, Claude excels in sophisticated text-based reasoning and generation, making it a strong contender for complex professional workflows.

4.1.3 Google's Gemini Series: Multimodality and Research Integration

Google's Gemini series, including Gemini Pro, emphasizes strong multimodal capabilities from the ground up. These models are engineered to effectively integrate and reason across text, images, video, and audio inputs. Gemini models feature extremely large context windows (up to 2 million tokens experimentally), enabling deep analysis of extensive documents, codebases, or multimedia content. Gemini Pro aims to be particularly efficient, offering high performance at potentially lower costs.

Google also leverages its AI advancements in specialized research tools. NotebookLM acts as a personalized research assistant, grounding its responses in user-provided source materials for enhanced accuracy and trustworthiness. Furthermore, Google explores concepts like the "AI Co-scientist," aiming to accelerate scientific breakthroughs by having AI assist directly in the research process, from hypothesis generation to data analysis (https://research.google/blog/accelerating-scientific-breakthroughs-with-an-ai-co-scientist/).

4.2 Other Notable Models and Platforms

Beyond the "big three," other significant players are emerging with distinct focuses.

4.2.1 DeepSeek: Open Source Powerhouse

DeepSeek has gained prominence with models like DeepSeek-V3, an open-source Mixture-of-Experts (MoE) model. It offers performance competitive with leading proprietary models, particularly in coding and reasoning tasks, but at a significantly lower cost due to its open nature and efficient architecture. DeepSeek's commitment to open source makes its models highly attractive for researchers and developers seeking customization, transparency, and lower operational expenses. Its reasoning model, R1, has also attracted a lot of attention. Although development of its successor, R2, has been slow, it may again surprise us with its performance.
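To make the efficiency claim concrete, the following is a minimal sketch of the general Mixture-of-Experts idea: a router scores every expert, but only the top-k experts run for a given token. It is a generic illustration under simplifying assumptions, not DeepSeek's actual implementation; the toy experts, the router scores, and the function names are all hypothetical.

```python
import math


def softmax(scores):
    """Convert raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    weight_sum = sum(probs[i] for i in top)
    return {i: probs[i] / weight_sum for i in top}


def moe_forward(x, experts, router_scores, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    weights = route_top_k(router_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Four toy "experts"; in a real model each expert is a feed-forward network.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x ** 2]
# Hypothetical router scores for a single token.
scores = [0.1, 2.0, -1.0, 2.0]

chosen = route_top_k(scores, k=2)
output = moe_forward(3.0, experts, scores, k=2)
print(sorted(chosen), output)
```

Only two of the four experts are evaluated here, which is the source of the cost saving: the total parameter count can grow while the compute per token stays roughly tied to k.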

4.2.2 Grok: Real-time Information and Conversational Style

Developed by xAI, Grok aims to differentiate itself with a conversational style often described as witty or rebellious, and access to real-time information, primarily through integration with the X (formerly Twitter) platform. This focus makes it potentially useful for understanding current events, trends, and public discourse.

4.3 Integrated platforms

Platforms that integrate multiple generative AI models offer users the flexibility to interact with various LLMs through a single interface, each catering to specific needs and preferences. These platforms enhance accessibility and streamline the user experience by consolidating diverse AI capabilities.

4.3.1 Poe

Developed by Quora, Poe serves as a centralized platform providing access to multiple AI chatbots, such as GPT-4 and Claude. It is designed to facilitate seamless interactions with various AI models, allowing users to ask questions, receive instant answers, and engage in back-and-forth conversations.

4.3.2 Coze

Coze is a next-generation AI application and chatbot development platform that enables users, regardless of programming experience, to create and deploy customized chatbots across different social platforms and messaging apps. It focuses on personalization, allowing for adaptation to user-specific preferences and learning from repeated interactions to provide a tailored conversational experience.

4.3.3 Perplexity AI

Perplexity AI is a conversational search engine that combines large language models with search capabilities to provide concise and accurate answers to user queries. It integrates information retrieval with generative AI, allowing users to obtain direct answers to their questions, supported by cited sources.
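The combination of retrieval and generation can be sketched in a few lines. The example below is a toy illustration of the general pattern, not Perplexity's actual pipeline: it ranks documents by simple word overlap and then builds a prompt that asks a model to answer from numbered sources. The documents, the example.com URLs, and the function names are hypothetical.

```python
def retrieve(query, documents, top_n=2):
    """Rank documents by how many query words they share (toy retrieval)."""
    query_words = set(query.lower().split())
    scored = []
    for doc in documents:
        overlap = len(query_words & set(doc["text"].lower().split()))
        if overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]


def build_cited_prompt(query, sources):
    """Number each source so the model can cite it as [1], [2], and so on."""
    lines = [
        f"[{i}] {doc['text']} ({doc['url']})"
        for i, doc in enumerate(sources, start=1)
    ]
    return (
        "Answer the question using only the numbered sources and cite them.\n"
        + "\n".join(lines)
        + f"\nQuestion: {query}"
    )


# Hypothetical mini-corpus standing in for web search results.
documents = [
    {"url": "https://example.com/a", "text": "Transformers use attention to process tokens"},
    {"url": "https://example.com/b", "text": "Diffusion models refine noise into images"},
    {"url": "https://example.com/c", "text": "Attention lets models weigh tokens differently"},
]

query = "how does attention process tokens"
sources = retrieve(query, documents)
prompt = build_cited_prompt(query, sources)
print(prompt)
```

The resulting prompt would then be sent to a language model; because each source carries a number and a URL, the answer can point back to where each claim came from.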

4.3.4 ChatOne

ChatOne integrates multiple AI models, including GPT, Claude Sonnet, Claude Opus, and Gemini models. It allows users to compare responses from different AI chatbots in one place, making differences between their outputs easier to see.

4.3.5 You.com

You.com is a search engine that offers a chat-first AI assistant experience while still providing web search capabilities. It introduced AI Modes to offer a tailored interaction experience through its platform, allowing users to switch between various leading AI models, such as OpenAI's GPT, Anthropic's Claude Sonnet & Opus, Google's Gemini, and Llama.

These platforms exemplify the trend of integrating multiple generative AI models into unified interfaces, enhancing user experience by providing diverse AI capabilities tailored to individual needs.

4.4 Playgrounds

Platforms designed to provide access to generative AI models serve as essential tools for developers, researchers, and businesses aiming to explore and test cutting-edge AI technologies. These platforms, such as OpenAI Playground, Google AI Studio, NVIDIA AI Playground, IBM watsonx.ai, and Hugging Face, offer user-friendly interfaces to interact with advanced language models like Llama 3.1, Zephyr, and others. By enabling experimentation with prompts, fine-tuning capabilities, and integration options, they simplify the process of leveraging generative AI for diverse applications. Many of these platforms offer free tiers or trials, fostering accessibility while supporting innovation in AI-driven projects. Their role in democratizing AI aligns with the growing demand for efficient, scalable, and personalized AI solutions across industries.

4.4.1 LMArena

LMArena (https://lmarena.ai/) allows users to directly compare several language models by entering identical prompts and assessing each model's responses side by side. This open-access resource provides a transparent means of determining which model may best suit specific needs, be it conversational fluency, response accuracy, or domain-specific expertise.
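The same side-by-side idea can be reproduced in a small script once you have programmatic access to more than one model. The sketch below is a minimal illustration: the two "models" are stand-in functions, and the names `compare_models` and `format_side_by_side` are hypothetical. In practice each entry would wrap a call to a real model.

```python
from typing import Callable, Dict


def compare_models(prompt: str, models: Dict[str, Callable[[str], str]]) -> Dict[str, str]:
    """Send the identical prompt to every model and collect replies by name."""
    return {name: ask(prompt) for name, ask in models.items()}


def format_side_by_side(results: Dict[str, str]) -> str:
    """Align model names in one column so replies are easy to compare."""
    width = max(len(name) for name in results)
    return "\n".join(
        f"{name.ljust(width)} | {reply}" for name, reply in results.items()
    )


# Stand-in models; replace each lambda with a function that calls a real model.
models = {
    "model-a": lambda p: p.upper(),
    "model-b": lambda p: p[::-1],
}

results = compare_models("hello", models)
report = format_side_by_side(results)
print(report)
```

Keeping the prompt identical across models is the important design choice: any difference in the output can then be attributed to the model rather than to the wording of the request.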

4.4.2 Nvidia build platform

Nvidia's LLM comparison platform (https://build.nvidia.com/) also serves a critical function in helping users evaluate and benchmark language models. Leveraging Nvidia's expertise in hardware acceleration, this platform allows the efficient assessment of different LLMs in terms of speed, accuracy, and efficiency across various tasks.

4.4.3 Google AI Studio

Google AI Studio (https://aistudio.google.com/) offers yet another venue for comparing Google's suite of LLMs, such as Gemini, against other available models. These platforms provide not only benchmarks but also insight into how specific models perform under conditions like limited compute power or handling complex user queries.

4.4.4 Hugging Face

Hugging Face is a platform that hosts a wide array of AI models, including Llama 3.1 and Zephyr. Users can access and test these models through the platform's interface. Hugging Face offers free access to many models, with options for subscription plans that provide additional features.

These platforms provide valuable resources for users interested in exploring and testing generative AI models, each offering unique features and access levels.

4.4.5 OpenAI Playground

OpenAI provides an interactive web-based interface called the OpenAI Playground, allowing users to experiment with its language models, including GPT-3 and GPT-4. Users can input prompts and observe the models' responses in real time. While OpenAI offers free access with usage limitations, extended access may require a subscription.

4.4.6 IBM watsonx.ai

IBM's watsonx.ai is an enterprise-ready AI studio that offers access to a selection of foundation models, including IBM's own Granite series and other open-source models. Users can build, train, and deploy AI models using this platform. IBM offers a free trial for watsonx.ai, allowing users to explore its capabilities.

4.5 Local Deployment of Language Models

Access to sophisticated language models is often constrained by financial or computational barriers, prompting the development of free alternatives. These alternatives allow users to interact with LLMs without incurring significant costs, albeit with trade-offs in sophistication, accuracy, or data privacy.

For individuals or institutions that prioritize data security and customization, deploying language models locally remains an attractive option. Tools such as LM Studio and Ollama enable users to run LLMs on local servers or personal hardware, avoiding the privacy concerns associated with sending data to third-party servers. Local deployment can be particularly advantageous for sectors like healthcare or finance, where privacy and compliance are paramount. Open-source models such as Llama 3, developed by Meta, can be deployed and used for research, education, and limited commercial use. Though these models may not rival GPT-4 in capability or depth, they provide considerable functionality at no cost, making them particularly useful in educational settings and non-profit applications.

Another noteworthy free alternative is Mixtral by Mistral AI. Mixtral offers competitive performance for basic language understanding and generation tasks. While its dataset size and parameter count are smaller compared to commercially available models, it still offers value by democratizing access to NLP technology.

LM Studio provides a streamlined platform for deploying smaller, fine-tuned versions of major LLMs, so enterprises can meet their own requirements without relying on third-party infrastructure. Because models can be adjusted to specific needs, LM Studio gives users control over both data and model behavior.

Ollama, another local deployment option, emphasizes ease of use. Designed for those with limited technical expertise, it provides user-friendly deployment scripts and pre-configured environments. These attributes make Ollama a compelling option for small businesses and individual researchers aiming to leverage the power of LLMs without substantial investments in hardware or specialized talent.

4.5.1 Tutorial:

To install and run a generative AI model such as Llama in LM Studio, follow these steps:

- Download LM Studio: Visit the LM Studio website and download the installer compatible with your operating system.

- Install LM Studio: Run the downloaded installer and follow the on-screen instructions to complete the installation.

- Launch LM Studio: Open the application after installation.

- Download a Llama Model:

  - Navigate to the 'Search' tab within LM Studio.

  - Enter "Llama" in the search bar.

  - Select the desired model variant (e.g., Llama 3.1 8B Instruct).

  - Click 'Download' to initiate the model download.

- Load the Model:

  - After the download completes, go to the 'AI Chat' section.

  - Click on 'Select a model to load' and choose the downloaded Llama model.

  - The model will load, allowing you to interact with it through the chat interface.

Ensure your system meets the necessary requirements for running large language models to achieve optimal performance.
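Once a model is loaded, it can also be queried from code rather than through the chat window. The sketch below assumes that LM Studio's local server has been started and is listening on its default address, http://localhost:1234/v1, which exposes an OpenAI-compatible chat completions endpoint. The model name "local-model" and the helper function names are placeholders; substitute the identifier that LM Studio shows for the model you loaded.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(prompt, model="local-model"):
    """Build a POST request in the OpenAI-compatible chat format."""
    payload = {
        "model": model,  # placeholder; use the identifier shown in LM Studio
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant's text out of a chat completions response."""
    data = json.loads(response_body)
    return data["choices"][0]["message"]["content"]


def ask_local_model(prompt, model="local-model"):
    """Send the prompt to the local server; the server must be running."""
    request = build_chat_request(prompt, model)
    with urllib.request.urlopen(request, timeout=60) as response:
        return extract_reply(response.read().decode("utf-8"))
```

With the server running, `ask_local_model("Summarize the benefits of local deployment.")` would return the model's reply as a string. Because the request never leaves the machine, this pattern keeps the privacy benefit that motivates local deployment.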

Chapter 5: API integration

5.1 Understanding APIs

An Application Programming Interface (API) is a set of protocols and tools that allow different software applications to communicate and share functionalities. APIs enable developers to integrate external services or data into their applications without building those services from scratch.

Why and When We Use APIs:

- Integration of Services: APIs allow different software systems to work together by enabling them to share data and functionalities. For example, a weather app on your phone integrates weather data from an external service through an API, saving time and resources.

- Extending Functionality: APIs help developers add advanced features to applications without building them from scratch. For instance, a company might use payment gateway APIs (like Stripe or PayPal) to incorporate secure payment processing.

- Automation and Efficiency: APIs enable automation by allowing applications to communicate without manual intervention. For example, APIs can automate workflows, such as synchronizing CRM software with email marketing platforms.

- Data Sharing: APIs provide standardized ways to share data between applications, making it easier to build tools that depend on external or internal datasets.
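The weather example above can be sketched as follows. This is a minimal illustration under stated assumptions: the endpoint https://api.example.com/v1/weather, its query parameters, and the response fields are all hypothetical, and the response is a hard-coded sample so the code runs without network access. A real service will define its own URL and fields in its documentation.

```python
import json
from urllib.parse import urlencode

# Hypothetical endpoint; a real weather provider documents its own URL.
API_BASE = "https://api.example.com/v1/weather"


def build_weather_url(city, units="metric"):
    """Encode the request parameters into the query string of the URL."""
    return f"{API_BASE}?{urlencode({'city': city, 'units': units})}"


def summarize_forecast(response_body):
    """Turn the JSON text returned by the service into a readable line."""
    data = json.loads(response_body)
    return f"{data['city']}: {data['temperature']} degrees, {data['condition']}"


# Sample response in the assumed format, standing in for a live API reply.
sample_response = '{"city": "Hong Kong", "temperature": 28, "condition": "cloudy"}'

url = build_weather_url("Hong Kong")
summary = summarize_forecast(sample_response)
print(url)
print(summary)
```

The application only needs to know the request format and the response format. How the provider collects and computes the weather data stays hidden behind the API, which is what lets the app reuse the service instead of building it.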

Why APIs Are Needed in a Company Context:

- Streamlined Operations: Companies use APIs to connect various internal systems, such as HR, CRM, and inventory management tools, ensuring consistent and efficient workflows.

- Scaling and Innovation: APIs allow businesses to access and integrate third-party services, such as AI tools, analytics platforms, or cloud computing resources, to scale operations and stay competitive.

- Customer Experience: APIs power customer-facing applications by integrating features like chat support, location services, and payment processing, enhancing user experience and satisfaction.

- Collaboration: In large organizations, APIs enable different departments or teams to access and use shared services, fostering collaboration without duplicating efforts.

Why APIs Are Useful in a Personal Context:

- Simplifying Daily Tasks: APIs allow personal applications to access useful services, like weather forecasts, navigation, or financial tracking, seamlessly integrating them into daily routines.

- Customization: Individuals can use APIs to personalize their tools or automate tasks. For example, APIs in platforms like IFTTT or Zapier let users create workflows to connect their favorite apps.

- Learning and Experimentation: APIs are invaluable for students, developers, and hobbyists who want to experiment with building projects, accessing external services, or learning about programming.

5.2 Implementation steps

Implementing an API involves several steps:

- Understanding Requirements: Clearly define the functionalities needed and identify suitable APIs that provide these services.

- Accessing Documentation: Review the API's documentation to comprehend its capabilities, endpoints, authentication methods, and usage limitations.

- Obtaining Access Credentials: Register with the API provider to receive necessary credentials, such as API keys or tokens, which authenticate your application's requests.